Improving access to supercomputers to boost meteorology, motor design and disaster reduction

Europe’s high-performance computing (HPC) capacity will soon reach exascale speeds, with systems more powerful than 1 million of the fastest laptops. This will allow a wide range of next-generation innovations. Today’s data-intensive tasks, such as weather forecasting, depend on computationally heavy workflows that combine modelling and simulations with new data analytics and artificial intelligence (AI) large language models. Current workflows and associated tools, designed for less powerful supercomputers, will probably need to be adapted for these new machines. “The programming models and tools of the applications underlying many current workflows are different, so we need to simplify how they work together,” explains Rosa Badia from the Barcelona Supercomputing Center and coordinator of the eFlows4HPC project. The project calls its approach ‘HPC Workflow-as-a-Service’ because its software solution, hosted on a user-friendly platform, seamlessly integrates various applications. “Our approach – which was not possible before – has simplified access to HPC systems, opening them up to non-expert users, spreading the scientific, social and industrial opportunities of supercomputing,” says Badia. So far, over 30 scientific publications have been generated using this approach, alongside a policy white paper on natural hazards.

Under the bonnet

The eFlows4HPC project added new functionalities to existing tools, supporting larger and more complex HPC workflows as well as cutting-edge technologies such as AI and big data. At the solution’s heart lies a series of software components, collectively known as a software stack, that implements HPC-based workflows from start to finish. Firstly, the workflow’s various applications, such as simulators, data processing and machine learning predictions, are developed using the PyCOMPSs programming environment. Data pipelines are then set up to coordinate how workflow data is managed, so that the computational and data management aspects of the workflow are combined. Finally, everything needed to run the software is encapsulated in a container file and installed on the HPC system. Users can then run their workflows using a dedicated interface.

Testing with diverse user communities

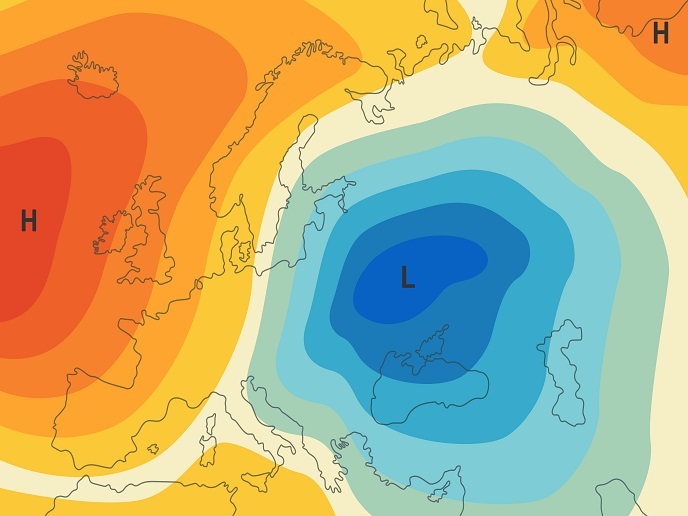

To demonstrate the potential of their approach, the team conducted three use-case trials with very different user communities. The first created a digital twin of manufacturing processes and assets, which allows various research and development scenarios to be simulated cost-effectively. For example, a project partner, CIMNE, collaborated with Siemens to produce a model of an electric motor able to avoid overheating. As this partnership produced encouraging results, CIMNE now intends to create a spin-off company to exploit these outcomes. The second focused on Earth system modelling, using two AI-based workflows to increase the efficiency of both Earth system models and prediction models for North Pacific tropical cyclones. These simulations could ultimately help improve global early warning, adaptation and mitigation systems. With both workflows returning positive results, extensions are now in use within the interTwin project and the DestinE initiative. Lastly, workflows for prioritising access to supercomputing resources, known as ‘urgent computing’, were developed (likely for the first time in Europe) to predict the impact of natural hazards, particularly earthquakes and tsunamis. “Thanks to the dynamic flexibility of our solution, extensions of these workflows are now being used in the DT-GEO project, developing digital twins of physical systems to monitor and predict natural hazards,” adds Badia.

A springboard for next-generation workflows

The project was carried out with support from the European High Performance Computing Joint Undertaking (EuroHPC JU), an initiative set up to develop a world-class supercomputing ecosystem in Europe. All the open-source software developed by the project, alongside the demonstration workflows, has been made freely available. “We encourage any user community to access the software and start collaborating to extend its capabilities,” notes Jorge Ejarque, eFlows4HPC technical leader. To ensure the transfer of best practices and results gained from the project, training and workshops were conducted in Barcelona, Munich and Helsinki. The team continues to extend the features and capabilities of all the project’s workflows, with a focus on AI systems and digital twins.